Trust and Respect: The pillars of our work at WAIYS

In a world where artificial intelligence is increasingly present, we face a multitude of questions, opportunities and risks. As a company committed to the conscious use of AI technology, we at WAIYS believe it is essential to consider not only the technological aspects of AI but also the social and ethical implications that come with it. Social interaction and emotional intelligence are core components of how people relate to one another. When we talk about AI, we need to ask ourselves how these qualities can also be preserved in the interaction between humans and machines. To achieve this, we have firmly anchored fundamental values in our way of working and in our corporate culture. These values form the foundation of our work and our decisions at WAIYS. Trust and respect are the values we live by.

How can trust in AI be established? Trust in AI comes from transparency and auditability. In addition, we implement robust security measures to ensure the integrity and reliability of our AI systems.

Can AI be respectful? In developing AI, our goal is to consistently respect the dignity and rights of all users. This begins with designing AI systems that protect privacy and give users control over their data. We aim to guarantee our users' data sovereignty and thereby ensure the privacy and confidentiality of the personal information we process.

The standards we have defined at WAIYS guide us in developing and deploying AI technology that is not only advanced but also built on transparent principles, ensuring responsible use and protecting the rights of our users.

Biased Information and Algorithmic Biases: A look at our responsibilities

Biased information refers to data that is distorted by prejudice, unexamined assumptions or unequal representation. Such distortions can take different forms, for example when certain groups are unequally represented in a dataset, or when data is skewed by unconscious bias.

We acknowledge that algorithmic biases can arise in AI systems when biases in the underlying data are learned by the model and become embedded in its parameters. This can lead to discriminatory outcomes that disadvantage certain groups or reinforce existing prejudices. At WAIYS, we work with clients from different cultures and contexts to counteract this problem from the AI training stage onward.

Our product testing helps us identify such biases so that we can take targeted measures to eliminate them.

From Start to Finish: The transformation from skepticism to curiosity in our workshops

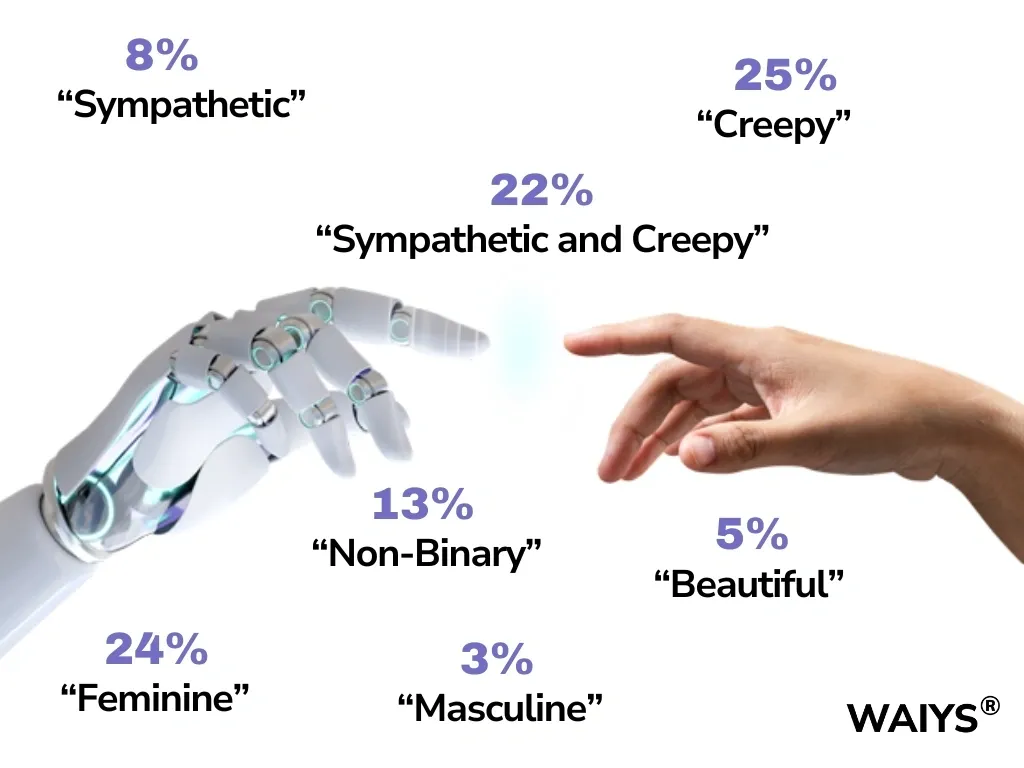

One phenomenon we observed during our Capo Caccia workshops was the initial reluctance of many participants to interact with our humanoid robot Ameca. The following diagram illustrates the results of the survey conducted during our workshop series in Capo Caccia:

The ratings show that workshop participants mainly perceived Ameca as scary, but also as likeable. Participants also tended to classify Ameca as female or non-binary.

Over the course of the workshops, however, interacting with Ameca aroused great enthusiasm and interest among the participants. Many were impressed by Ameca's human-like behavior and natural movements. The opportunity to interact directly with an advanced humanoid robot gave participants new insights into the potential and challenges of modern robotics.

At WAIYS, we ask ourselves how such hurdles can be overcome and trust can be built. Our answer is education and transparent communication, which break down barriers and foster confidence in the technology.

The following video shows the final event, at which Ameca danced with a workshop participant.